An entrepreneurial venture with a mission to empower rural women in Punjab, Pakistan

Founder and Trainer | Year: 2016-2018

In 2016, I started Mogul, a fashion house headquartered in Lahore that trained and employed 11 women to create haute couture designs for weddings and formal events.

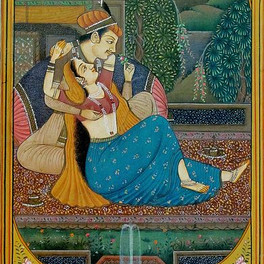

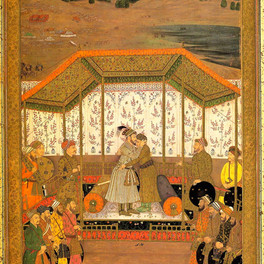

What made Mogul unique was its design philosophy, its aesthetic, and its innovative fusions of styles.

With a team of 11 artists, Mogul’s mission was twofold: to establish itself among the top couturiers working in Indian and Pakistani styles, and to make a positive impact on the lives of the people it touched, clients and employees alike.

As Pakistan’s only fashion start-up with an all-female workforce, Mogul strove to raise the standard of living for women in the country by offering them opportunities to work from their homes. These were women who often struggled to achieve financial independence because of a lack of opportunities and accessible public spaces.

In hyper-patriarchal societies, women are often deemed more honourable if they remain indoors. As a result, many husbands and fathers forbid women and girls from leaving the house. The darkest outcome of this practice is women’s financial dependence and, in turn, the abuse of their human rights.

My vision in starting Mogul was to employ women from the village where my father was born. To make this possible, I not only had to obtain permission for the women to attend a month-long training, but also had to devise a system of vans that picked up and delivered the products at various stages, so that the women could work from home.

Each woman earned an average weekly income of Rs. 20,000 (USD 150), which helped her gain standing in her community while covering many household and educational expenses.

Saheefa during her month-long training at the Mogul studio, refining her embroidery skills with new techniques.

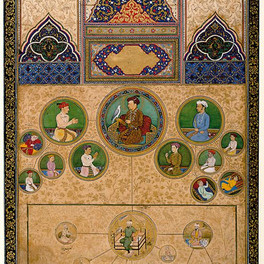

Cultural inspirations: the art of the Mughal era

Samples + Process